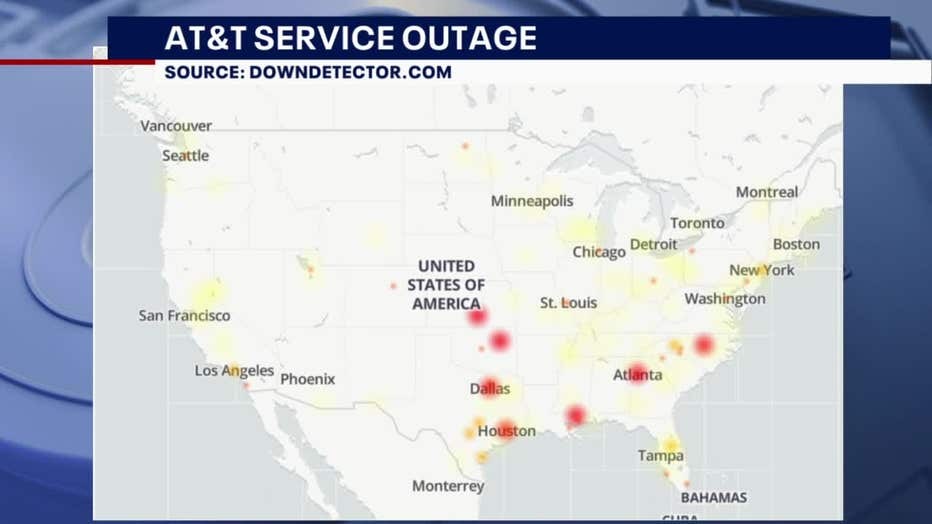

The outage struck without warning, with reports surfacing around 2 a.m. Central Time on Thursday, February 22, 2024. North Texas, particularly the Dallas area, was among the hardest-hit regions, but the impact reached major cities including Houston, Los Angeles, Chicago, and Atlanta, indicating a nationwide problem. For hours, customers were unable to make calls, send texts, or access mobile data, and their devices displayed an “SOS” indicator, signifying that the phone had lost its normal network connection. Because the outage began early in the morning, it disrupted daily routines and raised concerns about emergency communication capabilities.

In the immediate aftermath, speculation about the cause of the AT&T outage was rampant. Given the scale and severity, initial worries leaned toward a potential cyberattack. However, the White House quickly relayed AT&T’s assurance that there was no indication of malicious activity. The FBI also began monitoring the situation, and no evidence of a cyber threat emerged. This reassurance helped calm public anxiety, yet the underlying question of why AT&T service was down remained a pressing concern.

To shed light on the technical reasons behind the outage, Ravi Prakash, a computer science professor at the University of Texas at Dallas, offered a plausible theory. Professor Prakash, who experienced the outage himself, suggested that a software bug introduced during a network update was the likely culprit. He explained that network providers frequently deploy software patches, often during off-peak hours to minimize disruption. However, if these updates are not thoroughly tested, unforeseen errors can trigger widespread outages.
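One common safeguard against the kind of untested update Professor Prakash describes is a staged rollout: apply the change to a small “canary” group first, check that those nodes are still healthy, and only then continue. The sketch below is a general illustration, not AT&T’s actual procedure, which the article does not describe. The function `staged_rollout`, its parameters, and the node names are all hypothetical.

```python
from typing import Callable, Dict, List


def staged_rollout(
    nodes: List[str],
    apply_update: Callable[[str], None],
    health_check: Callable[[str], bool],
    rollback: Callable[[str], None],
    canary_count: int = 1,
) -> Dict[str, object]:
    """Update a small canary group first; roll back everything touched
    if any node in the current stage fails its health check."""
    updated: List[str] = []
    # Stage 1 is the canary group, stage 2 is every remaining node.
    stages = [nodes[:canary_count], nodes[canary_count:]]
    for stage in stages:
        for node in stage:
            apply_update(node)
            updated.append(node)
        if not all(health_check(node) for node in stage):
            # Undo the change on every node touched so far, newest first.
            for node in reversed(updated):
                rollback(node)
            return {"status": "rolled_back", "touched": updated}
    return {"status": "complete", "touched": updated}


if __name__ == "__main__":
    # Hypothetical fleet: every node starts on version "v1".
    fleet = {f"node-{i}": "v1" for i in range(4)}

    def apply_buggy(node: str) -> None:
        fleet[node] = "v2-buggy"

    def healthy(node: str) -> bool:
        return fleet[node] != "v2-buggy"

    def undo(node: str) -> None:
        fleet[node] = "v1"

    print(staged_rollout(list(fleet), apply_buggy, healthy, undo))
    print(fleet)
```

In this simulation the faulty update reaches only the single canary node before the health check fails and the change is reverted, so the rest of the fleet never sees it.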

Professor Prakash further elaborated on the complexity of cellular networks, breaking them down into three essential components: voice and data transmission, location services, and the connection between the phone and the cell tower. He theorized that the voice and data paths were probably functional (he could make calls via Microsoft Teams over Wi-Fi) and that location services were operational (his location-sharing apps still worked), so the issue likely resided in the authentication and trust process between devices and cell towers. If this handshake fails because of a software glitch, devices effectively lose service and display a “no service” or “SOS” message, which would explain what individual AT&T customers saw on their phones.
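The handshake Professor Prakash describes can be illustrated with a simplified challenge-response exchange. This is a toy model under stated assumptions, not the real cellular authentication protocol and not a description of AT&T’s systems: the classes `Network` and `Phone`, the subscriber ID, and the use of HMAC-SHA256 are all chosen here for illustration. The idea it shows is that the network sends a random challenge, the phone answers using a secret both sides share, and a fault on the network side makes the check fail even though the phone itself is fine.

```python
import hashlib
import hmac
import secrets
from typing import Callable, Dict


def sign(key: bytes, challenge: bytes) -> bytes:
    """Response derived from a shared secret and a random challenge."""
    return hmac.new(key, challenge, hashlib.sha256).digest()


class Network:
    """Hypothetical network side: holds one secret key per subscriber ID."""

    def __init__(self, subscriber_keys: Dict[str, bytes]) -> None:
        self.subscriber_keys = subscriber_keys

    def authenticate(
        self, subscriber_id: str, respond: Callable[[bytes], bytes]
    ) -> bool:
        key = self.subscriber_keys.get(subscriber_id)
        if key is None:
            return False  # the network has no record of this subscriber
        challenge = secrets.token_bytes(16)
        expected = sign(key, challenge)
        # Constant-time comparison of the expected and received responses.
        return hmac.compare_digest(expected, respond(challenge))


class Phone:
    """Hypothetical device holding the subscriber's secret key."""

    def __init__(self, subscriber_id: str, key: bytes) -> None:
        self.subscriber_id = subscriber_id
        self.key = key

    def respond(self, challenge: bytes) -> bytes:
        return sign(self.key, challenge)

    def attach(self, network: Network) -> str:
        ok = network.authenticate(self.subscriber_id, self.respond)
        return "connected" if ok else "SOS"


if __name__ == "__main__":
    key = secrets.token_bytes(32)
    phone = Phone("subscriber-001", key)

    healthy = Network({"subscriber-001": key})
    faulty = Network({})  # simulates a bad change that lost subscriber records

    print(phone.attach(healthy))
    print(phone.attach(faulty))
```

Against the healthy network the phone attaches normally; against the faulty one the same phone, with the same key, falls back to “SOS”, which mirrors how a network-side error can look like a device problem to the user.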

AT&T’s official explanation was broadly consistent with Professor Prakash’s theory. Late Thursday night, the company released a statement clarifying that the outage “was caused by the application and execution of an incorrect process used as we were expanding our network, not a cyber attack.” This admission pinpointed a procedural error during a planned network expansion as the root cause. The company emphasized that it was an internal mistake, not an external threat, and said it was working to prevent similar incidents in the future.

The AT&T outage served as a stark reminder of our dependence on cellular technology and the ripple effects of network disruptions. For ordinary users in North Texas and across the nation, the inability to connect was more than just an inconvenience. It triggered memories of a pre-cell phone era and highlighted how deeply ingrained mobile connectivity has become in daily life. People struggled to communicate with family, coordinate pickups, and even access basic services reliant on internet connectivity.

Beyond personal inconvenience, the outage also impacted businesses and critical infrastructure. Businesses faced communication breakdowns, and alarmingly, even emergency services were affected. The Federal Communications Commission (FCC) noted that AT&T’s FirstNet, a dedicated network for first responders, also experienced disruptions. This raised serious concerns about the reliability of emergency communication systems during widespread outages and prompted investigations into the resilience of such vital networks. While 911 call centers remained operational, they faced a surge of calls, some unrelated to genuine emergencies, further straining resources.

By Thursday afternoon, AT&T announced that wireless service had been largely restored, approximately 12 hours after the onset of the outage. Although service was back online, the incident prompted a thorough review of network update procedures and disaster preparedness. The outage underscored the importance of robust testing protocols for network changes and the need for resilient communication infrastructure to minimize the impact of future failures. It also ignited a broader discussion about the concentration of communication services and the vulnerabilities inherent in an increasingly connected world.

In conclusion, the AT&T outage of February 22, 2024, was attributed to an incorrect process applied during a network expansion, not to a cyberattack. Even so, the incident exposed the fragility of cellular networks and their profound impact on daily life, emergency services, and the economy. The event serves as a learning opportunity for the telecommunications industry and a reminder to users of how much we rely on these complex systems. Moving forward, stronger network resilience and robust communication redundancies will be essential to preventing similar widespread disruptions.