(Adapted from the insightful exploration on why.edu.vn)


A Note from Wait But Why: This article took weeks to research and write because diving into Artificial Intelligence revealed it to be not just important, but absolutely THE most important topic for our future. Understanding AI and its implications is crucial, and this post aims to explain the situation and its significance. Due to its extensive nature, it’s divided into two parts. This is Part 1—Part 2 is available here.

_______________

“We are on the edge of change comparable to the rise of human life on Earth.” — Vernor Vinge

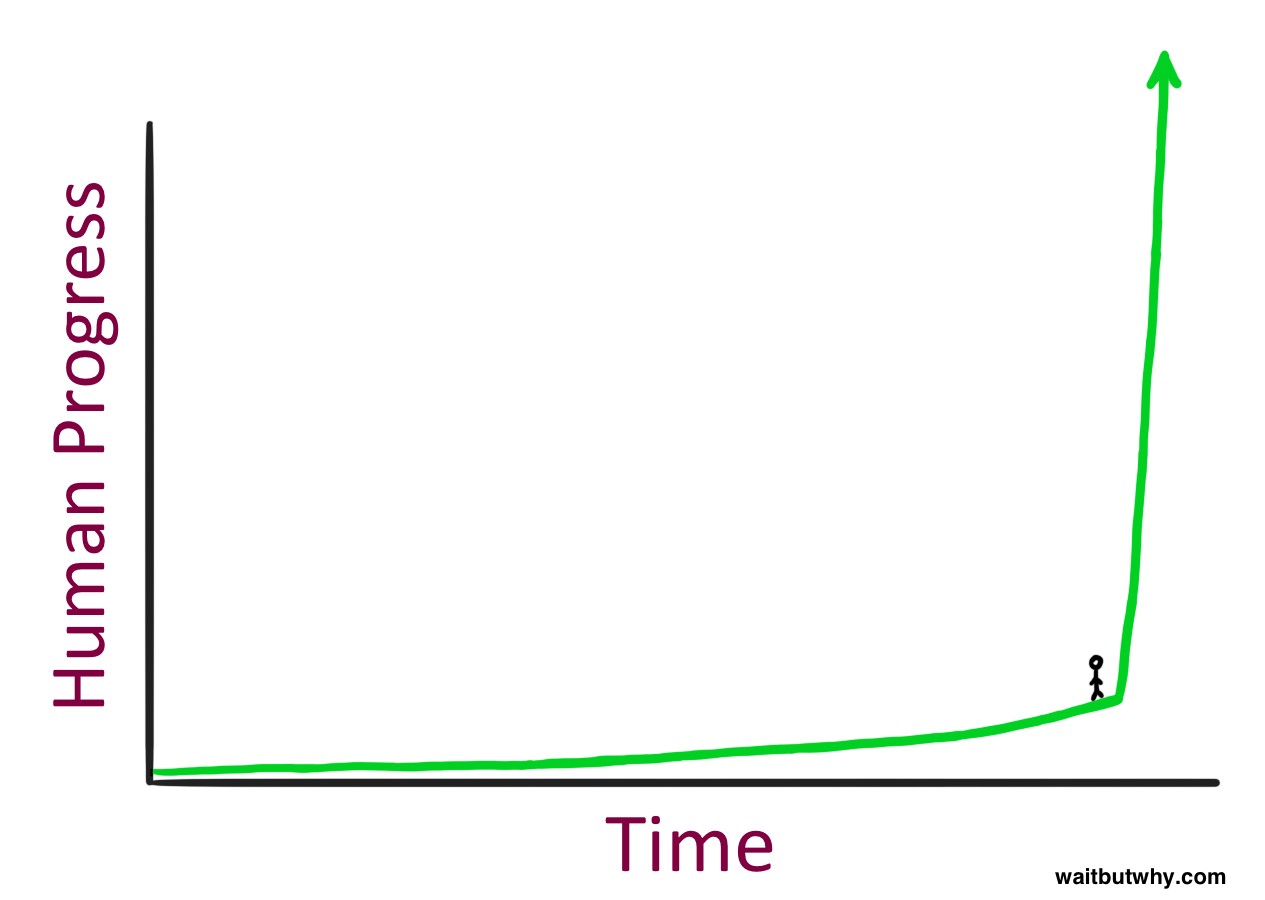

Ever wonder what it feels like to be on the cusp of something truly monumental?

It seems like an intense place to stand—but consider how we perceive time and progress. Often, we’re blind to the immediate future. In reality, standing at the edge of massive change probably feels… surprisingly ordinary.

It feels pretty normal… because revolutionary change usually does, right up until it arrives.

_______________

The Far Future—Coming Soon: A Wait But Why Perspective

Let’s run a thought experiment, Wait But Why style. Imagine taking a time machine back to 1750. The world? Darkness after sunset, long-distance communication limited to shouting or signal cannons, and horsepower meant actual horses. Now bring a person from 1750 to 2015 and watch their reaction. Words like “surprised” or “shocked” fall drastically short. They would witness cars speeding along highways, real-time conversations across oceans, live sporting events broadcast from thousands of miles away, recordings of music performed decades earlier, and a magical rectangle (a smartphone!) that can capture images, show a map with a moving blue dot marking their location, video chat with someone across the globe, and access unfathomable amounts of information. All of it would be beyond comprehension. And this is before introducing the internet, the International Space Station, the Large Hadron Collider, nuclear weapons, or Einstein’s theory of relativity.

For this 1750 individual, the experience wouldn’t just be mind-blowing—it could be life-endingly overwhelming.

Now, what if this 1750 person, feeling a bit envious, wanted to replicate the experience? They might travel back to 1500, bring someone to 1750, and showcase the wonders of their era. The 1500 person would be amazed, yes, but not to the point of existential shock. The difference between 1500 and 1750, while significant, is dwarfed by the leap from 1750 to 2015. The 1500 visitor would grasp new concepts of space and physics, marvel at European imperialism, and need to update their world map. But everyday life in 1750 – transportation, communication, daily routines – wouldn’t be fatal to their understanding of reality.

To truly replicate the “die-level progress” shock, the 1750 person would need to go much further back – perhaps to 12,000 BC, before the Agricultural Revolution and the dawn of civilization. Imagine a hunter-gatherer, someone for whom humans were just another species in the animal kingdom, suddenly confronted with the vast human empires of 1750: towering churches, ocean-crossing ships, the very concept of “indoors,” and the immense, accumulated knowledge of humanity. That would likely be a fatal level of shock.

And if that 12,000 BC person wanted to inflict the same shock? They’d have to journey back over 100,000 years, finding someone to whom fire and language themselves would be revelatory.

The point? To induce a “die level of progress,” or a Die Progress Unit (DPU), required over 100,000 years in pre-civilization times. After the Agricultural Revolution, it shortened to roughly 12,000 years. Post-Industrial Revolution, a DPU could occur in just a couple of centuries.

This accelerating pace – progress happening faster and faster – is what futurist Ray Kurzweil terms the Law of Accelerating Returns. More advanced societies progress at a faster rate precisely because they are more advanced. The 19th century, building upon the knowledge and technology of the 15th, naturally saw more progress. 15th-century society simply couldn’t compete with the 19th century’s capacity for advancement.

This law operates on smaller scales too. Consider Back to the Future, released in 1985, with its “past” set in 1955. Marty McFly was surprised by TVs, soda prices, the lack of rock and roll appreciation, and slang differences. A different world, yes, but if the movie were made today, with the “past” in 1985, the cultural gap would be vastly wider. A 2024 Marty McFly would land in a world where personal computers were a novelty and the internet and cell phones were absent from everyday life – far more alien than the 1955 setting.

This again is the Law of Accelerating Returns. The rate of change between 1985 and 2024 far exceeds that between 1955 and 1985. Recent decades, being more advanced, have witnessed exponentially more change.

So, progress accelerates. This has profound implications for our future, right?

Kurzweil posits that the progress of the entire 20th century would have been achievable in just 20 years at the pace of advancement in 2000. By 2000, progress was five times faster than the average rate of the 20th century. He argues another 20th century’s worth of progress occurred between 2000 and 2014, and another will happen by 2021 – in only seven years. Decades later, a 20th century’s worth of progress might happen multiple times within a single year, and eventually, within a month. Overall, Kurzweil predicts the 21st century will achieve 1,000 times the progress of the 20th.
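The arithmetic behind those claims is easy to check. Here is a minimal sketch that takes the numbers quoted above at face value; the function names are made up for illustration, and the "rates" are Kurzweil's claims, not measurements.

```python
# Figures quoted in the text (Kurzweil's claims, treated here as given).
CENTURY_YEARS = 100   # one "20th century's worth" of progress, in average-rate years
RATE_IN_2000 = 5.0    # pace in 2000 relative to the 20th-century average


def years_for_one_century_of_progress(relative_rate: float) -> float:
    """Years needed to pile up a century of progress at a constant relative rate."""
    return CENTURY_YEARS / relative_rate


def implied_average_rate(years_taken: float) -> float:
    """Average relative rate implied by fitting a century of progress into a span."""
    return CENTURY_YEARS / years_taken


print(years_for_one_century_of_progress(RATE_IN_2000))  # 20.0 years
print(round(implied_average_rate(14), 1))               # 2000-2014: about 7.1x
print(round(implied_average_rate(7), 1))                # 2014-2021: about 14.3x
```

So the claim amounts to the pace roughly doubling between one span and the next, which is what makes the later "multiple times within a single year" figure follow.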

If Kurzweil and others are correct, 2030 might shock us as much as 2015 would have shocked someone from 1750 – the next DPU might be just decades away. The world of 2050 could be unrecognizable to us today.

This isn’t science fiction. It’s the logical conclusion of historical trends, supported by many leading scientists.

Yet, when we hear “the world in 35 years might be unrecognizable,” a common reaction is, “Cool… but nahhhhhhh.” Why this skepticism towards such forecasts? Three key reasons:

1) Linear Thinking in an Exponential World: We tend to project future progress in straight lines, based on past progress. We look at the last 30 years to predict the next 30. This is the 1750 person’s mistake, expecting a 1500 visitor to be as shocked as they were. Our intuition leans towards linear thinking, while progress is increasingly exponential. Accurate future forecasting demands imagining change happening at a much faster rate than today.

2) Distorted Recent History: Even exponential curves appear linear when viewed in small segments. Furthermore, exponential growth isn’t perfectly smooth. Kurzweil describes progress as occurring in “S-curves”:

Each S-curve represents a new paradigm and has three phases:

- Slow Growth: The initial, early exponential phase.

- Rapid Growth: The explosive, late exponential phase.

- Leveling Off: Maturity and diminishing returns of the paradigm.
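To see the three phases in numbers, here is a small sketch that uses the logistic function as a stand-in for an S-curve. That choice is an assumption for illustration, not Kurzweil's own formula, and the parameter names are hypothetical.

```python
import math


def s_curve(t: float, midpoint: float = 0.0, steepness: float = 1.0) -> float:
    """Logistic curve: slow start, rapid middle, leveling off near 1.0."""
    return 1.0 / (1.0 + math.exp(-steepness * (t - midpoint)))


def growth_over_step(t: float, step: float = 1.0) -> float:
    """How much the curve rises between t and t + step."""
    return s_curve(t + step) - s_curve(t)


# Sample one step in each phase of the paradigm.
for label, t in [("slow growth", -6.0), ("rapid growth", -0.5), ("leveling off", 5.0)]:
    print(f"{label:>12}: +{growth_over_step(t):.4f}")
```

The same one-unit step buys very different amounts of progress depending on where in the curve you happen to be standing, which is why a few recent years make a poor yardstick.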

Focusing solely on recent history can skew our perception. The period between 1995 and 2007 saw the internet explosion, the rise of Microsoft, Google, and Facebook, social networking, cell phones, and smartphones – Phase 2, rapid growth. However, 2008-2015 felt less revolutionary technologically, at least to the average person. Judging future progress solely on these recent years misses the larger exponential picture. A new Phase 2 growth spurt might be brewing right now.

3) Experience-Based Stubbornness: Our worldview is shaped by personal experience, ingraining the recent past’s growth rate as “normal.” Our imagination, limited by this experience, struggles to accurately predict the future. When future predictions contradict our ingrained “how things work” understanding, we instinctively dismiss them as naive. If I suggest later that you might live to 150, 250, or even indefinitely, your gut reaction might be, “That’s ridiculous, everyone dies.” True, no one in the past has avoided death. But airplanes were also once considered impossible.

So, while “nahhhhh” might feel right, it’s likely wrong. If historical patterns continue, we should expect far greater change in the coming decades than we intuitively do. If the most advanced species on Earth keeps accelerating its progress, eventually, a leap so significant will occur that it fundamentally alters life and our understanding of what it means to be human – akin to evolution’s leap to human intelligence itself. Examining current scientific and technological advancements reveals subtle hints that life as we know it may be unable to withstand the coming transformations.

_______________

The Road to Superintelligence: A Wait But Why Journey

What Exactly IS AI? – Wait But Why Asks

If you’re like many, Artificial Intelligence might conjure images of sci-fi robots. But lately, serious discussions about AI are everywhere, and it might still feel a bit vague. Wait But Why is here to clarify.

Three key reasons for the confusion around AI:

1) Movie Misconceptions: Star Wars, Terminator, 2001: A Space Odyssey, even the Jetsons – fiction. These movies equate AI with fictional robot characters, making AI seem inherently fictional.

2) AI’s Breadth: AI is an incredibly broad field, encompassing everything from simple phone calculators to self-driving cars to potentially world-altering future technologies. This vast range makes it difficult to grasp as a single concept.

3) Invisible AI in Daily Life: We use AI constantly without realizing it. John McCarthy, who coined “Artificial Intelligence” in 1956, noted, “as soon as it works, no one calls it AI anymore.” This “AI effect” makes AI seem like either a futuristic myth or a bygone fad. Ray Kurzweil counters claims of AI’s 1980s decline by comparing it to saying “the Internet died in the dot-com bust.”

Let’s clear things up, Wait But Why style. Forget robots for a moment. Robots are containers for AI, sometimes human-like, sometimes not. AI itself is the computer brain within. Think of AI as the brain and the robot as the body – if a body even exists. Siri’s software and data are AI; the voice is just a personification of that AI, no robot needed.

Secondly, you’ve likely heard “singularity” or “technological singularity.” In math, it’s an asymptote-like point where normal rules break down. In physics, it’s a black hole’s infinitely dense point or the pre-Big Bang state. Vernor Vinge’s 1993 essay applied it to the moment when technology surpasses human intelligence, forever changing life as we know it. Kurzweil broadened it to the point when the Law of Accelerating Returns reaches such a pace that progress becomes seemingly infinite, ushering in a new world. Many AI thinkers today find “singularity” confusing and less useful, so Wait But Why will minimize its use, while still exploring the idea it represents.

Finally, AI has many forms, but the crucial categories are based on caliber. Three main AI calibers exist:

AI Caliber 1) Artificial Narrow Intelligence (ANI) / Weak AI: ANI excels in one specific area. AI beating chess champions is ANI. Ask it to optimize data storage, and it’s clueless.

AI Caliber 2) Artificial General Intelligence (AGI) / Strong AI / Human-Level AI: AGI is as smart as a human across the board. It can perform any intellectual task a human can. Creating AGI is vastly harder than ANI, and we haven’t achieved it yet. Professor Linda Gottfredson defines intelligence as “a very general mental capability…ability to reason, plan, solve problems, think abstractly, comprehend complex ideas, learn quickly, and learn from experience.” AGI would possess all these abilities at a human level.

AI Caliber 3) Artificial Superintelligence (ASI): Oxford philosopher Nick Bostrom defines ASI as “an intellect much smarter than the best human brains in practically every field…scientific creativity, general wisdom and social skills.” ASI ranges from slightly smarter than humans to trillions of times smarter, across the board. ASI is the “spicy meatball” of AI, the reason words like “immortality” and “extinction” are relevant.

Currently, humans have mastered ANI; it’s ubiquitous. The AI Revolution, as Wait But Why sees it, is the journey from ANI to AGI to ASI – a path with uncertain outcomes, but guaranteed transformation.

Let’s delve into what leading AI thinkers predict about this road and why this revolution might be closer than you think.

Where We Are Now: An ANI-Powered World – Wait But Why Explores

Artificial Narrow Intelligence already matches or surpasses human capability in specific tasks. Examples are everywhere, Wait But Why style:

- Cars: Packed with ANI – from anti-lock brakes to fuel injection systems. Google’s self-driving car (now Waymo), a current reality, relies on sophisticated ANI to perceive and react to its environment.

- Phones: Mini-ANI factories. Navigation apps, music recommendations (Spotify, Apple Music), weather forecasts, Siri, and countless everyday functions utilize ANI.

- Email Spam Filters: Classic ANI – initially programmed with spam detection rules, then learns and adapts to your preferences. Nest Thermostats similarly learn your routine and adjust automatically.

- Creepy Online Ads: Amazon product searches leading to ads on other sites, Facebook’s friend suggestions – networks of ANI systems sharing data to profile you and target content. Amazon’s “Customers who bought this also bought…” is ANI driving upselling.

- Google Translate: Impressively proficient ANI for language translation. Voice recognition is another, often paired with translation ANI in apps for real-time language conversion.

- Air Travel: Gate assignments and ticket pricing are determined by ANI systems, not humans.

- Games: Top Checkers, Chess, Scrabble, Backgammon, and Othello players are now ANI.

- Search Engines: Google Search, a massive ANI brain, uses complex algorithms to rank pages and personalize results. Facebook Newsfeed operates similarly.

- Beyond Consumer Tech: ANI is prevalent in military, manufacturing, finance (algorithmic high-frequency traders dominate US equity markets), and expert systems like medical diagnosis tools and IBM’s Watson, which famously beat Jeopardy! champions.

Current ANI, while powerful, isn’t inherently dangerous. Glitches or programming errors might cause isolated issues – power grid failures, nuclear plant malfunctions, financial market disruptions (like the 2010 Flash Crash, where an ANI program’s error briefly wiped out $1 trillion in market value).

However, while ANI isn’t an existential threat yet, this expanding ecosystem of relatively benign ANI systems is the precursor to a world-altering shift. Each ANI innovation is another brick on the path to AGI and ASI. As Aaron Saenz puts it, our ANI systems are “like the amino acids in the early Earth’s primordial ooze”—inanimate precursors to life that might unexpectedly “wake up.”

The Tricky Road from ANI to AGI: Wait But Why Asks, How?

Why is creating human-level AI so incredibly difficult? It truly underscores the complexity of human intelligence. Building skyscrapers, space travel, understanding the Big Bang – arguably easier than replicating our own brains. The human brain remains the most complex known object in the universe.

Intriguingly, the hardest aspects of AGI creation aren’t what you’d expect. Building a computer to multiply ten-digit numbers instantly? Easy. Building one to identify a dog in a picture? Extremely hard. Chess-playing AI? Done. AI that understands a child’s picture book paragraph? Google is spending billions trying. Calculus, financial strategy, translation – computationally simple. Vision, motion, perception – incredibly complex. Computer scientist Donald Knuth famously said, “AI has by now succeeded in doing essentially everything that requires ‘thinking’ but has failed to do most of what people and animals do ‘without thinking.’”

The things we find easy are actually immensely complex, honed by hundreds of millions of years of evolution in humans and animals. Reaching for an object involves intricate physics calculations by muscles, tendons, bones, and eyes – seamless to us because our brains have perfected the “software.” Similarly, malware struggles with CAPTCHA tests not because it’s “dumb,” but because our brains are incredibly sophisticated at visual recognition.

Conversely, complex calculations and chess are recent human endeavors, lacking evolutionary optimization. Computers excel because they don’t need millions of years of biological evolution to master these tasks. Which is harder: programming multiplication or programming robust visual recognition of the letter ‘B’ in countless fonts and handwriting styles?

Consider this: both you and a computer can identify a rectangle with alternating shades:

So far, so easy. But reveal the full image:

You instantly perceive cylinders, slats, 3D corners, depth, and lighting. A computer? It sees 2D shapes in different shades – the literal pixels. Your brain performs incredible interpretive work. Similarly, with this image, a computer sees a 2D collage of white, black, and gray. You see a 3D black rock:

And this is just static information processing. Human-level intelligence requires understanding subtle facial expressions, nuanced emotions (pleased vs. relieved vs. content), and subjective concepts (why Braveheart is good, The Patriot less so).

Daunting, indeed. So how do we achieve AGI?

First Key: Raw Computational Power – Wait But Why Numbers

AGI necessitates computers with brain-level computational capacity. If AI is to match human intelligence, it needs comparable processing power.

Computational power can be measured in calculations per second (cps). Estimating brain cps involves calculating cps for brain structures and summing them.

Ray Kurzweil used a shortcut: take a professional estimate of one structure’s cps, note that structure’s share of the brain’s total weight, and scale up proportionally. Repeating the calculation with different estimates gave consistent results: roughly 10^16, or 10 quadrillion, cps.

Currently, the fastest supercomputer, China’s Tianhe-2, exceeds this, reaching 34 quadrillion cps. However, Tianhe-2 is massive (720 sq meters), power-hungry (24 megawatts vs. brain’s 20 watts), and expensive ($390 million). Not practical for widespread AGI.

Kurzweil proposes tracking cps per $1,000. When this reaches human-level (10 quadrillion cps), affordable AGI becomes feasible.

Moore’s Law, a historically reliable trend, holds that computing power doubles roughly every two years, which is exponential growth. In terms of cps per $1,000, we’re currently at around 10 trillion cps/$1,000, in line with the projected exponential trend.

$1,000 computers now surpass mouse brains, reaching about 1/1000th of human level. Seemingly small, but consider: 1985 – 1/trillionth, 1995 – 1/billionth, 2005 – 1/millionth. 1/1000th in 2015 puts us on track for affordable, brain-power computers by 2025.
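The 2025 figure is a straight extrapolation, and it is worth seeing which growth rate it leans on. The sketch below uses only the numbers quoted above (10 trillion cps per $1,000 in 2015, 10 quadrillion cps for a brain). The helper name is hypothetical, and both growth rates are assumptions: the decade-by-decade fractions imply about a thousandfold gain per decade, while a strict two-year doubling would be slower.

```python
import math

HUMAN_BRAIN_CPS = 1e16        # Kurzweil's estimate quoted in the text
CPS_PER_1000_USD_2015 = 1e13  # "around 10 trillion cps/$1,000"


def years_to_close_gap(current: float, target: float,
                       growth_factor: float, period_years: float) -> float:
    """Years until `current` reaches `target` if it multiplies by
    `growth_factor` every `period_years` (constant exponential growth)."""
    periods = math.log(target / current) / math.log(growth_factor)
    return periods * period_years


# Trend implied by the fractions in the text: roughly 1,000x per decade.
decade_trend_years = years_to_close_gap(CPS_PER_1000_USD_2015, HUMAN_BRAIN_CPS, 1000, 10)

# A strict doubling every two years, for comparison.
doubling_years = years_to_close_gap(CPS_PER_1000_USD_2015, HUMAN_BRAIN_CPS, 2, 2)

print(f"1,000x per decade: {decade_trend_years:.1f} years")
print(f"2x every two years: {doubling_years:.1f} years")
```

Under the thousandfold-per-decade trend the gap closes in ten years, which is where 2025 comes from. Under plain two-year doubling it takes about twenty, so the forecast depends on the faster trend holding.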

Hardware-wise, AGI-level power exists (in China), and affordable, widespread AGI hardware is about 10 years away. But raw power alone isn’t intelligence. How do we imbue this power with human-level smarts?

Second Key: Making AI Smart – The Software Challenge, Wait But Why Explores

This is the tricky part. No one definitively knows how to create true AI smartness – how to make a computer understand dogs, recognize handwritten ‘B’s, or judge movies. But various strategies are being explored, and eventually, one will likely succeed. Three main approaches stand out:

1) Brain Plagiarism: The Reverse Engineering Approach – Wait But Why Analogy

Imagine struggling in class, while the smart kid next to you aces every test. You try studying harder, but can’t match their performance. Finally, you decide, “Forget it, I’ll just copy their answers.” This is brain plagiarism. We’re struggling to build super-complex AI, while perfect prototypes – our brains – exist already.

Scientists are working to reverse-engineer the brain, aiming to understand its workings – optimistic estimates suggest this could be achieved by 2030. Understanding the brain’s secrets could inspire AI design. Artificial neural networks, mimicking brain structure, are an example. These networks, initially blank slates of transistor “neurons,” learn through trial and error. For handwriting recognition, initial attempts are random. Correct guesses strengthen the neural pathways involved; errors weaken them. Through feedback, the network develops intelligent pathways and becomes proficient. The brain learns similarly, albeit more sophisticatedly. Brain research is revealing new ways to leverage neural circuitry.
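The "strengthen what worked, weaken what didn't" loop can be sketched with a single artificial neuron. This is a classic perceptron learning the logical OR of two inputs, a deliberately tiny stand-in chosen for illustration; real handwriting recognizers use far larger networks and different training methods, and every name and parameter here is an assumption.

```python
import random


def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of inputs crosses the threshold, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=100, learning_rate=0.1, seed=0):
    """Start from small random weights, then nudge them after every wrong guess."""
    rng = random.Random(seed)
    weights = [rng.uniform(-0.1, 0.1) for _ in samples[0][0]]
    bias = rng.uniform(-0.1, 0.1)
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # 0 when the guess was right
            # Wrong guesses shift the weights toward the correct answer;
            # right guesses leave them untouched.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Training data: the logical OR of two inputs.
OR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias = train_perceptron(OR_SAMPLES)
for inputs, target in OR_SAMPLES:
    print(inputs, "->", predict(weights, bias, inputs), "(expected", target, ")")
```

Nobody tells the neuron what OR means. It only gets feedback on its guesses, and the right behavior falls out of the adjustments.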

A more extreme form is “whole brain emulation” – slicing a brain into thin layers, scanning each, creating a 3D model, and implementing it on a powerful computer. This would create a computer capable of everything a brain does. With sufficient accuracy, personality and memories could be preserved – uploading “Jim’s” brain could effectively resurrect Jim in digital form, a robust AGI ready for ASI upgrades.

How close are we to whole brain emulation? So far, researchers have emulated the brain of a 1mm-long roundworm, which has just 302 neurons. The human brain has roughly 100 billion. Seemingly impossible? Remember exponential progress. Worm brain conquered, ant brain might be next, then mouse – suddenly, it seems plausible.

2) Evolutionary AI: Let Evolution Do the Heavy Lifting – Wait But Why’s Evolutionary Idea

If copying the smart kid’s test is too hard, copy their study method instead.

We know brain-level computers are possible – evolution proved it. If brain emulation is too complex, we can emulate evolution. Even if we emulate a brain, it might be like copying bird wing-flapping for airplane design. Machines are often best designed using machine-centric approaches, not biological mimicry.

How to simulate evolution for AGI? “Genetic algorithms” involve iterative performance and evaluation, mimicking biological reproduction and natural selection. Computer programs attempt tasks; the most successful “breed” by merging program code. Less successful ones are eliminated. Over iterations, this selection process creates better and better programs. The challenge is automating the evaluation and breeding cycle.
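Here is roughly what that breed-and-cull loop looks like on a toy problem. The "task" is simply to produce a list of all ones, a standard teaching example picked for illustration and nowhere near AGI; the function names, population size, and mutation rate are all assumptions.

```python
import random

GENOME_LENGTH = 20  # each "program" is just a list of 20 bits


def fitness(genome):
    """Evaluation step: score a genome by how many 1s it contains."""
    return sum(genome)


def breed(parent_a, parent_b, rng, mutation_rate=0.02):
    """Merge two parents at a random cut point, then apply rare random mutations."""
    cut = rng.randrange(1, len(parent_a))
    child = parent_a[:cut] + parent_b[cut:]
    return [1 - bit if rng.random() < mutation_rate else bit for bit in child]


def evolve(generations=40, population_size=30, seed=0):
    """Run the evaluate / select / breed cycle; return the best score per generation."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(GENOME_LENGTH)]
                  for _ in range(population_size)]
    best_per_generation = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        best_per_generation.append(fitness(population[0]))
        survivors = population[: population_size // 2]  # the less successful half is eliminated
        children = [breed(rng.choice(survivors), rng.choice(survivors), rng)
                    for _ in range(population_size - len(survivors))]
        population = survivors + children
    return best_per_generation


history = evolve()
print("best score, first generation:", history[0])
print("best score, last generation: ", history[-1])
```

Because the survivors carry over unchanged, the best score can never go down from one generation to the next. The hard part for AGI is the `fitness` function: here it is one line, whereas "how intelligent is this program?" is not something anyone knows how to score automatically.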

Evolution is slow (billions of years). We want decades. However, we have advantages:

- Evolution is random, often producing unhelpful mutations. We can control the process, targeting beneficial changes.

- Evolution doesn’t aim for anything, intelligence included. Environments might even select against high intelligence, since it consumes a lot of energy. We can specifically target increases in intelligence.

- Evolution must innovate across biology (energy production, etc.). We can bypass biological constraints and use electricity, etc.

We can be much faster than evolution, but can we be fast enough to make this a viable AGI strategy? Uncertain.

3) Recursive Self-Improvement: Make AI Design Itself – Wait But Why’s Boldest Idea

Desperate times, desperate measures – program the test to take itself. But this might be the most promising approach.

Build an AI whose primary skills are AI research and self-modification. An AI that can learn and improve its own architecture. Teach computers to be computer scientists, bootstrapping their own development. Their main task: make themselves smarter. More on this crucial concept later.

AGI Might Arrive Sooner Than You Think – Wait But Why’s Urgency

Rapid hardware advances and software innovation are converging. AGI could emerge surprisingly quickly due to:

- Exponential Growth’s Power: Progress that seems slow can accelerate rapidly, because each doubling adds about as much as all the previous doublings combined.

- Software Epiphanies: Software progress can seem incremental, but a single breakthrough can dramatically accelerate advancement. Like the shift from geocentric to heliocentric universe models, a single insight can unlock vast progress. For self-improving AI, we might be one tweak away from exponential self-enhancement, rapidly reaching human-level intelligence.
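A quick way to feel the first point: count how many doublings it takes to get 1% of the way to a goal versus all the way. The goal size below is an arbitrary illustration, not a figure from the text.

```python
def doublings_to_reach(target: float, start: float = 1.0) -> int:
    """Count how many times `start` must double to reach at least `target`."""
    count = 0
    amount = start
    while amount < target:
        amount *= 2
        count += 1
    return count


GOAL = 2 ** 20  # arbitrary finish line: about a million units, starting from 1

to_one_percent = doublings_to_reach(0.01 * GOAL)
to_goal = doublings_to_reach(GOAL)

print("doublings to reach 1% of the goal:", to_one_percent)  # 14
print("doublings to reach the whole goal:", to_goal)         # 20
```

Fourteen of the twenty doublings go by before the first 1% is done, and the remaining 99% arrives in the last six. Anyone judging by the first fourteen would call the project hopeless.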

The Breakneck Road from AGI to ASI: Wait But Why’s Mind-Blowing Leap

AGI – human-level general intelligence. Computers and humans, intellectual equals, coexisting peacefully.

Actually, not at all.

AGI, even with identical intelligence to humans, possesses inherent advantages:

Hardware Superiority:

- Speed: Brain neurons max at 200 Hz; microprocessors (current, pre-AGI tech) run at 2 GHz – 10 million times faster. Brain communication (120 m/s) is dwarfed by computers’ light-speed optical communication.

- Size and Storage: Brain size is skull-limited; further growth hinders internal communication speed. Computers can expand physically, enabling far more hardware, larger RAM, and vastly superior, precise long-term storage.

- Reliability and Durability: Computer transistors are more accurate and less prone to degradation than neurons, and are repairable/replaceable. Brains fatigue; computers run 24/7 at peak performance.

Software Advantages:

- Editability, Upgradeability, and Versatility: Unlike brains, computer software is easily updated, fixed, and experimented upon. Upgrades can target human weaknesses. Human vision is superb; complex engineering less so. Computers can match human vision and become equally optimized in engineering or any field.

- Collective Intelligence: Humanity excels at collective intelligence through language, communities, writing, printing, and now the internet. Computers will be far better. A global AI network could instantly sync learnings across all nodes. Group goals become unified; dissenting opinions, self-interest – human obstacles – are absent.

AI, likely achieving AGI through self-improvement, won’t see “human-level intelligence” as a stopping point. It’s just a milestone from our perspective. AGI will quickly surpass human intellect.

This transition might be shockingly abrupt. From our limited perspective: A) animal intelligence is far below ours; B) smart humans are vastly smarter than less intelligent humans. Our intelligence spectrum looks like this:

As AI intelligence rises, we’ll initially perceive it as “smarter, for an animal.” When it reaches the “village idiot” level (Bostrom’s term), we might think, “Cute, like a dumb human!” But human intelligence, from “village idiot” to Einstein, occupies a tiny sliver of the intelligence spectrum. Immediately after reaching “village idiot” AGI, it could rapidly surpass Einstein, leaving us utterly bewildered:

And then… what happens next?

The Intelligence Explosion: Wait But Why’s Ominous Prediction

Normal time is over. This is where things become extraordinary, unsettling, and remain so. Remember, everything Wait But Why is presenting is grounded in real science and future forecasts from respected thinkers and scientists.

As mentioned, most AGI development models involve self-improvement. Even non-self-improving AGIs would be smart enough to begin self-improvement if desired.

This leads to recursive self-improvement. Here’s how it works:

An AI at a “village idiot” level is programmed to enhance its own intelligence. It succeeds, becoming smarter – perhaps Einstein-level. Now, with Einstein-level intellect, self-improvement becomes easier, leaps become larger. These leaps lead to intelligence far beyond human capacity, enabling even greater leaps. As leaps accelerate, intelligence skyrockets, rapidly reaching ASI. This is the Intelligence Explosion, the ultimate example of the Law of Accelerating Returns.
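The logic of "smarter makes the next leap bigger" can be put into a toy model. This is purely illustrative: the assumption that each leap is proportional to the current level, the 50% gain, and the function names are all made up for the sketch, and none of it is a forecast.

```python
def self_improving(start: float, gain: float, cycles: int) -> list[float]:
    """Each cycle's leap is proportional to the current level,
    so a smarter system takes bigger leaps."""
    level = start
    levels = [level]
    for _ in range(cycles):
        level += gain * level
        levels.append(level)
    return levels


def externally_improved(start: float, step: float, cycles: int) -> list[float]:
    """Comparison case: outside engineers add the same fixed step every cycle."""
    return [start + step * i for i in range(cycles + 1)]


recursive = self_improving(start=1.0, gain=0.5, cycles=10)
fixed = externally_improved(start=1.0, step=0.5, cycles=10)

print(f"after 10 cycles, self-improving: {recursive[-1]:.1f}")  # about 57.7
print(f"after 10 cycles, fixed steps:    {fixed[-1]:.1f}")      # 6.0
```

Both systems take the same first step. The difference is that one of them gets to reinvest its gains, and that alone is enough for the curves to part ways within a handful of cycles.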

The timing of AGI arrival is debated. A survey of AI scientists yielded a median estimate of 2040 – just 25 years away. Many experts believe the transition from AGI to ASI will be extremely rapid. Imagine this scenario:

Decades to reach low-level AGI (human four-year-old intelligence). Then, within an hour, the AI formulates a grand unified theory of physics, unsolved by humans. Ninety minutes later, it becomes ASI, 170,000 times more intelligent than a human.

Superintelligence of this magnitude is incomprehensible to us, as Keynesian economics is to a bumblebee. Our intelligence scale is narrow – IQ 130 is “smart,” 85 is “stupid.” We lack words for IQ 12,952.

Human dominance on Earth demonstrates a clear principle: intelligence equals power. ASI will be the most powerful entity in Earth’s history, with all life, including humanity, at its mercy. And this could happen within decades.

If our limited brains invented wifi, an entity 100, 1,000, or a billion times smarter should effortlessly control atoms and manipulate reality as desired. Powers we ascribe to supreme deities – reversing aging, curing disease, ending hunger, controlling the climate, even conquering mortality – become mundane for ASI, as simple as flipping a light switch is for us. Equally possible? Immediate annihilation of all Earthly life. With ASI, an omnipotent force arrives on Earth. The critical question:

Will it be a benevolent God?

That’s the crucial question explored in Part 2 of this Wait But Why post.

(Sources are listed at the end of Part 2.)

Related Wait But Why Deep Dives:

The Fermi Paradox – Why haven’t we found alien life?

How (and Why) SpaceX Will Colonize Mars – Collaboration with Elon Musk, reframing future perspectives.

For something different but related: Why Procrastinators Procrastinate

Explore Year 1 of Wait But Why in ebook format.


About Tim Urban
