(This article is inspired by and expands upon the original post from why.edu.vn)

PDF Note: For a printer-friendly, offline version of this post, you can buy it here, or check out a preview.

Author’s Note: Crafting this post took an extended period because delving into Artificial Intelligence revealed a truth I found staggering. It became clear that AI isn’t just a significant topic; it’s unequivocally THE most critical subject concerning our future. My aim was to absorb as much knowledge as possible and then articulate a post that genuinely elucidates the AI landscape and its profound importance. Unsurprisingly, the content grew substantially, necessitating a division into two parts. What you’re reading is Part 1. Part 2 is available here.

_______________

“We are on the edge of change comparable to the rise of human life on Earth.” — Vernor Vinge

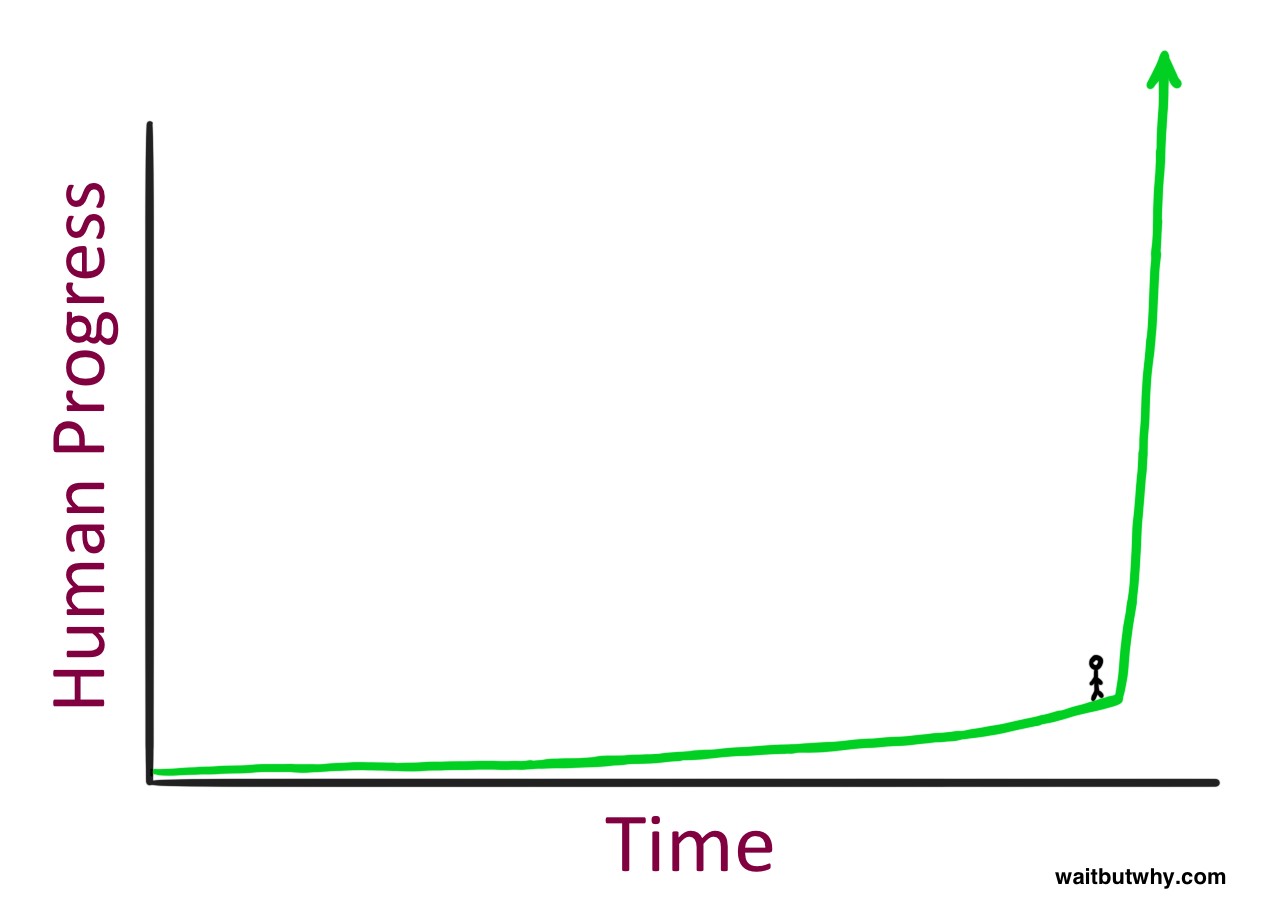

What’s it like to stand on the edge of such monumental change?

Standing at the precipice of history feels intense, right? But consider this perspective shift: when you’re positioned on a timeline, your view is inherently limited to what’s behind you. You can’t see the future directly ahead. So, the true sensation of standing on the edge likely feels more like this:

It probably feels… normal. But why is this normality deceptive? Let’s delve into the future that’s approaching faster than we realize, a future shaped by the AI revolution.

_______________

The Far Future—Coming Soon: Exploring the “But Wait But Why” of Progress

Imagine a journey back in time to 1750. A world without electricity, where long-distance communication was shouting or signaling with cannons, and horses were the engines of transportation. Now, bring someone from that era to 2015 and witness their reaction. It’s almost impossible for us to fathom the sheer bewilderment they would experience. Shiny cars speeding on highways, conversations with people across oceans happening in real-time, sporting events broadcast from thousands of miles away, music recorded decades prior playing instantly, and a magical rectangle – a smartphone – capable of capturing images, providing maps with a moving blue dot indicating location, video chatting with someone on the other side of the world, and accessing a universe of information. And this is before even introducing them to the internet, the International Space Station, the Large Hadron Collider, nuclear weapons, or Einstein’s theory of general relativity.

For this 1750 individual, the experience wouldn’t just be surprising or shocking; words like “mind-blowing” fall short. It could genuinely be lethal in its impact.

However, consider the reverse experiment. If that 1750 person, intrigued by our reaction, decided to replicate the time travel experience, they might go back to 1500, retrieve someone, and bring them to 1750. While the 1500 person would undoubtedly be amazed, their shock wouldn’t be as profound. The gap between 1500 and 1750, while significant, is considerably smaller than that between 1750 and 2015. The 1500 traveler would encounter mind-expanding concepts about space and physics, be impressed by European imperialism, and need to redraw their world map. But daily life in 1750 – transportation, communication, and so on – wouldn’t be a fatal shock.

To deliver the same fatal level of shock, a unit we can call a “Die Progress Unit” (DPU), the 1750 individual would need to journey much further back, perhaps to 12,000 BC, before the Agricultural Revolution and the dawn of civilization. A hunter-gatherer from that era, witnessing the vast empires of 1750 with towering churches, ocean-faring ships, the concept of “indoors,” and the immense accumulation of human knowledge, would likely experience a fatal level of culture shock.

Following this pattern, what if the 12,000 BC person, after their hypothetical demise, wanted to conduct a similar experiment? Traveling back to 24,000 BC and bringing someone to 12,000 BC would elicit a far milder reaction. The 24,000 BC person might be underwhelmed. To achieve a comparable DPU, they’d need to travel back over 100,000 years to introduce someone to fire and language for the first time.

This illustrates a crucial pattern: the pace of human progress accelerates over time. A DPU took over 100,000 years in hunter-gatherer times, but only about 12,000 years post-Agricultural Revolution. In the post-Industrial Revolution world, a DPU occurs within a couple of centuries.

This accelerating pace, what futurist Ray Kurzweil terms the Law of Accelerating Returns, stems from the fact that more advanced societies are inherently better equipped to progress rapidly. 19th-century society, with its accumulated knowledge and superior technology, could advance much faster than 15th-century society.

This principle applies on smaller scales too. The movie Back to the Future, released in 1985, depicted “the past” as 1955. While Marty McFly was surprised by TVs, soda prices, and musical tastes in 1955, a similar movie made today, with the past set in 1985, could highlight far more dramatic differences. A character traveling to 1985 would encounter a world where personal computers were still a rarity and where the internet and cell phones played almost no part in daily life. Today’s teenager, born in the late 90s, would be profoundly out of place in 1985.

This is, again, the Law of Accelerating Returns in action. The rate of advancement between 1985 and 2015 was greater than that between 1955 and 1985, leading to more significant changes in the recent 30-year period.

This accelerating progress has remarkable implications for our future.

Kurzweil posits that the entire 20th century’s progress could have been achieved in just 20 years at the advancement rate of 2000. By 2000, progress was five times faster than the 20th-century average. He further estimates that another 20th century’s worth of progress occurred between 2000 and 2014, and another is expected by 2021 – just seven years. Decades later, a 20th century’s worth of progress might occur multiple times within a year, and eventually, in less than a month. Kurzweil projects that the 21st century will witness 1,000 times the progress of the 20th century due to the Law of Accelerating Returns.
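The arithmetic behind these figures can be checked with a rough sketch. It assumes, purely for illustration, that the rate of progress doubles every 10 years; that doubling period and the names `DOUBLING_YEARS` and `progress_between` are assumptions of this sketch, not figures taken from Kurzweil or from this post.

```python
import math

# Assumption (not from the article): the rate of progress doubles every
# DOUBLING_YEARS years, with the rate in the year 2000 defined as 1.0.
DOUBLING_YEARS = 10.0

def progress_between(start_year: float, end_year: float) -> float:
    """Progress accumulated between two years, in 'years at the 2000 rate'.

    This is the integral of 2 ** ((t - 2000) / DOUBLING_YEARS) over the span.
    """
    k = math.log(2) / DOUBLING_YEARS
    return (math.exp(k * (end_year - 2000)) - math.exp(k * (start_year - 2000))) / k

# From the text: the whole 20th century equals 20 years at the 2000 rate.
TWENTIETH_CENTURY = 20.0

speedup_vs_average = 100 / TWENTIETH_CENTURY  # 100 calendar years vs 20 years
century_21 = progress_between(2000, 2100)
ratio = century_21 / TWENTIETH_CENTURY

print(f"2000 rate vs 20th-century average: {speedup_vs_average:.0f}x")
print(f"21st century, measured in 20th centuries of progress: {ratio:.0f}")
```

Under this assumed doubling period the 21st century comes out at several hundred 20th centuries of progress, the same order of magnitude as Kurzweil’s 1,000. A somewhat shorter doubling period would push the result higher.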

If Kurzweil and like-minded scientists are right, 2030 might be as astonishing to us as 2015 was to someone from 1750. The next DPU could be just decades away, and 2050 might be unrecognizable to us today.

This isn’t science fiction; it’s a prediction based on historical trends and the informed opinions of numerous scientists.

So, when we hear that the world 35 years from now might be totally unrecognizable, why do we instinctively think, “Cool… but nahhhhhhh”? There are three primary reasons for our skepticism toward such forecasts:

1) Linear vs. Exponential Thinking: We tend to project future progress linearly, based on past progress. We look at the last 30 years to predict the next 30, or simply add 20th-century progress to the year 2000. This linear thinking mirrors the mistake of the 1750 person expecting a 1500 person to be as shocked as they were. We intuitively think linearly, but progress is happening exponentially. Even those who consider the current rate of progress still underestimate the future because they miss the accelerating nature of change.

Visual representation of linear versus exponential projections. The exponential curve drastically overtakes the linear one, illustrating how future progress will likely accelerate beyond simple linear estimations.
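The gap between the two kinds of projection can be made concrete with a minimal sketch. The units are arbitrary, and the 10-year doubling period and 30-year lookback are hypothetical values chosen only to show the shape of the error.

```python
# Hypothetical numbers chosen for illustration: progress is measured in
# arbitrary units and assumed to double every 10 years.
def exponential_level(years_from_now: float, doubling_years: float = 10.0) -> float:
    """Level of progress relative to today (today = 1.0)."""
    return 2 ** (years_from_now / doubling_years)

def linear_forecast(horizon: float, lookback: float = 30.0) -> float:
    """Extend the average slope of the last `lookback` years straight forward."""
    slope = (exponential_level(0) - exponential_level(-lookback)) / lookback
    return exponential_level(0) + slope * horizon

for horizon in (10, 20, 30):
    lin = linear_forecast(horizon)
    exp = exponential_level(horizon)
    print(f"{horizon:>2} years out: linear {lin:.2f}, exponential {exp:.2f}")
```

With these assumed numbers, a forecaster who extends the last 30 years in a straight line expects progress to reach 1.875 times today’s level in another 30 years, while the exponential curve reaches 8 times today’s level.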

2) Distorted View from Recent History: Even exponential growth can appear linear when viewed in short segments, like a small arc of a large circle appearing almost straight. Furthermore, exponential growth isn’t perfectly smooth. Kurzweil describes progress in “S-curves”:

Diagram illustrating S-curves of technological progress. Each S-curve represents a paradigm shift, showing slow initial growth, rapid expansion, and eventual plateauing as the technology matures.

An S-curve represents a paradigm shift with three phases:

- Slow Growth: The initial, slow phase of exponential growth.

- Rapid Growth: The explosive, late phase of exponential growth.

- Leveling Off: Maturity and plateauing of the paradigm.
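The three phases can be sketched with a logistic function, a common mathematical stand-in for an S-curve. Using the logistic form here is an assumption of this sketch, as are the window boundaries; Kurzweil’s description does not prescribe a formula.

```python
import math

def s_curve(t: float, midpoint: float = 0.0, steepness: float = 1.0) -> float:
    """Logistic function: maturity of a paradigm, from 0 (new) to 1 (mature)."""
    return 1.0 / (1.0 + math.exp(-steepness * (t - midpoint)))

def growth(t0: float, t1: float) -> float:
    """How much the curve rises between t0 and t1."""
    return s_curve(t1) - s_curve(t0)

# Three equal-length windows, one per phase (boundaries are illustrative).
phases = {
    "slow growth": growth(-6, -2),
    "rapid growth": growth(-2, 2),
    "leveling off": growth(2, 6),
}
for name, gain in phases.items():
    print(f"{name:>13}: {gain:.3f}")
```

Over windows of equal length, most of the total rise happens in the middle window, which is why an observer living in the first or last window can badly misjudge the overall pace.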

Our perception of progress can be skewed by the current phase of the S-curve. The period between 1995 and 2007 saw the internet boom, the rise of Microsoft, Google, and Facebook, social networking, and the advent of cell phones and smartphones – Phase 2, rapid growth. However, 2008-2015 appeared less revolutionary technologically, potentially misleading observers into underestimating the current rate of advancement. A new Phase 2 growth spurt might be brewing right now, unseen.

3) Experiential Bias: Our personal experiences shape our understanding of the world and future possibilities. The rate of change we’ve personally witnessed becomes ingrained as “normal.” Our imagination, limited by our experience, struggles to accurately predict a future drastically different from our past. When future predictions contradict our experience-based reality, we tend to dismiss them as naive. If told you might live to 150, 250, or even indefinitely, your immediate reaction might be, “That’s impossible, everyone dies.” True, historically, death has been universal. But airplanes, too, were once deemed impossible.

So, while “nahhhhh” might feel instinctively correct, it’s likely inaccurate. Logic and historical patterns suggest that the coming decades will bring far more change than we intuitively expect. If the most advanced species on Earth continues to make increasingly large and rapid leaps, a leap will eventually occur that fundamentally transforms life and our understanding of humanity – much like the evolutionary leap to human intelligence itself. Current scientific and technological advancements subtly hint that life as we know it might be on the verge of such a transformative leap.

_______________

The Road to Superintelligence: Navigating the “But Wait But Why” Journey

What Is AI? Unpacking the “But Wait But Why” Mystery

If you’re like many, Artificial Intelligence might still seem like a futuristic fantasy, yet it’s increasingly discussed by serious thinkers. The term itself can be confusing.

There are three key sources of confusion around AI:

1) Hollywood Association: Movies like Star Wars, Terminator, 2001: A Space Odyssey, and even The Jetsons depict AI as fictional robots. This cinematic portrayal can make AI seem like a purely fictional concept.

2) Breadth of Scope: AI is a vast field, encompassing everything from simple phone calculators to self-driving cars and potentially world-altering future technologies. This broad range makes it difficult to grasp as a unified concept.

3) Everyday AI Invisibility: We interact with AI constantly in our daily lives, often without realizing it. John McCarthy, who coined “Artificial Intelligence” in 1956, noted that “as soon as it works, no one calls it AI anymore.” This makes AI seem like either a distant future concept or a bygone fad. Ray Kurzweil points out that some believe AI “withered in the 1980s,” comparing this to claiming “the Internet died in the dot-com bust.”

Let’s clarify. Forget robots for a moment. A robot is simply a vessel for AI, sometimes humanoid, sometimes not. AI is the intelligence within, the computer brain. Think of AI as the brain and the robot as the body – if a body even exists. Siri’s software and data are AI; the voice is a personification, but there’s no physical robot involved.

You might have also encountered terms like “singularity” or “technological singularity.” In mathematics, “singularity” describes situations where normal rules break down, like asymptotes. Physics uses it for infinitely dense black holes or the pre-Big Bang state – again, rule-breaking scenarios. Vernor Vinge’s 1993 essay applied “singularity” to the moment when technology surpasses human intelligence, forever altering life and breaking current norms. Kurzweil broadened this, defining singularity as the point when the Law of Accelerating Returns leads to seemingly infinite technological progress, ushering in a new world. Many AI experts now avoid the term due to its ambiguity, and for clarity, we will mostly focus on the underlying idea of transformative technological acceleration rather than the term “singularity” itself.

Finally, while AI comes in many forms, the crucial distinction lies in its caliber. We can categorize AI into three major levels:

AI Caliber 1) Artificial Narrow Intelligence (ANI): Also known as Weak AI, ANI excels in specific areas. Examples include AI that can defeat chess champions but is limited to chess. It can’t perform other intellectual tasks.

AI Caliber 2) Artificial General Intelligence (AGI): Referred to as Strong AI or Human-Level AI, AGI is intelligence comparable to human intelligence across the board. It’s a machine capable of any intellectual task a human can perform. Creating AGI is far more complex than ANI, and we haven’t achieved it yet. Professor Linda Gottfredson defines intelligence as “a very general mental capability that, among other things, involves the ability to reason, plan, solve problems, think abstractly, comprehend complex ideas, learn quickly, and learn from experience.” AGI would possess all these abilities at a human level.

AI Caliber 3) Artificial Superintelligence (ASI): Oxford philosopher Nick Bostrom defines superintelligence as “an intellect that is much smarter than the best human brains in practically every field, including scientific creativity, general wisdom and social skills.” ASI ranges from slightly smarter than humans to trillions of times smarter – across all domains. ASI is the core of AI’s transformative potential, the reason discussions of “immortality” and “extinction” become relevant.

Currently, we’ve mastered ANI, which is pervasive. The AI Revolution is the journey from ANI to AGI and then ASI – a path with uncertain outcomes but guaranteed to be transformative.

Let’s examine the anticipated trajectory of this revolution and why it might unfold sooner than many expect.

Where We Are Currently—A World Running on ANI: The “But Wait But Why” of Present AI

Artificial Narrow Intelligence excels at specific tasks, often surpassing human capabilities in those areas. Consider these examples of how prevalent ANI already is:

- Automotive ANI: Cars are replete with ANI, from anti-lock braking systems to fuel injection optimization. Google’s self-driving car, currently in testing, will rely on sophisticated ANI for perception and reaction.

- Mobile ANI: Your smartphone is an ANI powerhouse. Navigation apps, personalized music recommendations from Pandora, weather updates, Siri interactions, and countless other everyday functions utilize ANI.

- Adaptive ANI: Email spam filters exemplify ANI that learns and adapts. Initially programmed with general spam detection rules, they refine their intelligence based on your specific preferences. The Nest Thermostat similarly learns your routine to optimize heating and cooling.

- Networked ANI: The personalized recommendations you see after browsing Amazon, or Facebook’s friend suggestions, are powered by interconnected ANI systems. These networks gather and share information about your preferences to tailor content and suggestions. Amazon’s “People who bought this also bought…” feature is another ANI system designed to leverage customer behavior for upselling.

- Language ANI: Google Translate and voice recognition software are impressive examples of ANI specializing in narrow tasks. Apps combining these ANIs enable real-time translation of spoken language.

- Logistical ANI: Airline gate assignments and ticket pricing are often determined by ANI systems, optimizing efficiency and revenue.

- Gaming ANI: The world’s best players in Checkers, Chess, Scrabble, Backgammon, and Othello are now ANI systems.

- Search and Social Media ANI: Google Search and Facebook’s Newsfeed are massive ANI brains, using complex algorithms to rank content and personalize results.

- Industry and Expert ANI: Sophisticated ANI is widespread in military, manufacturing, and finance. Algorithmic high-frequency traders account for over half of equity trades in US markets. Expert systems like IBM’s Watson assist doctors in diagnoses and famously defeated Jeopardy champions.

Current ANI systems, while powerful, aren’t inherently dangerous. Malfunctions or programming errors can still cause isolated disasters such as power grid failures, nuclear plant malfunctions, or financial market disruptions. In the 2010 Flash Crash, for example, an ANI program’s error sent the stock market briefly plummeting, temporarily erasing about $1 trillion in market value.

While ANI doesn’t pose an existential threat, this expanding ecosystem of relatively benign ANI is a precursor to a potentially transformative future. Each ANI innovation subtly paves the way for AGI and ASI. As Aaron Saenz suggests, our current ANI systems are like “the amino acids in the early Earth’s primordial ooze”: inanimate components poised to give rise to something unexpectedly sentient. Let’s explore the road from ANI to AGI.

The Road From ANI to AGI: The “But Wait But Why” of Human-Level Intelligence

Why It’s So Hard: Understanding the “But Wait But Why” of AGI Complexity

Nothing highlights the marvel of human intelligence like trying to replicate it in a machine. Building skyscrapers, sending humans to space, unraveling the Big Bang – all seem simpler than understanding or replicating our own brains. The human brain remains the most complex known object in the universe.

Intriguingly, the most challenging aspects of creating AGI aren’t what we intuitively expect. Computers effortlessly perform complex calculations, like multiplying ten-digit numbers, yet struggle with tasks humans find trivial, like distinguishing a dog from a cat. AI can conquer chess but struggles to comprehend a child’s picture book. Google invests billions trying to achieve this seemingly simple comprehension. Computers excel at complex tasks like calculus, financial analysis, and language translation, but falter at vision, motion, and perception—abilities we take for granted. As computer scientist Donald Knuth observed, “AI has by now succeeded in doing essentially everything that requires ‘thinking’ but has failed to do most of what people and animals do ‘without thinking.’”

These seemingly “easy” human abilities are incredibly complex, refined over millions of years of evolution. Reaching for an object involves intricate physics calculations performed instantly by our muscles, tendons, bones, and eyes. We have highly optimized “software” in our brains for these tasks. Similarly, malware’s inability to solve CAPTCHAs isn’t due to its “dumbness,” but rather highlights the sophistication of our visual processing.

Conversely, tasks like multiplication and chess are recent human inventions. We haven’t evolved specific proficiencies for them, making it easier for computers to surpass us. Consider the challenge: programming a computer to multiply large numbers versus programming it to recognize the essence of the letter “B” in countless fonts and handwriting styles.

For example, both humans and computers can identify a rectangle with alternating shades:

Initially, the computer might seem equally capable. But reveal the full image:

An optical illusion image. Initially appearing as a simple rectangle, revealing the full image shows complex 3D shapes and lighting, easily interpreted by humans but challenging for current AI to fully understand.

You effortlessly describe the cylinders, slats, and 3D corners. A computer would struggle, describing only 2D shapes and shades, missing the implied depth and lighting. Your brain performs sophisticated interpretation of implied visual information. Another example:

A black and white photo of a dark rock. Humans easily perceive it as a 3D object, while a computer might only analyze it as a 2D collage of shades and lines. Credit: Matthew Lloyd

A computer sees a 2D collage of shades; you see a 3D rock. And this is just processing static information. Human-level intelligence requires understanding subtle facial expressions, nuanced emotions (pleased vs. relieved vs. content), and complex subjective judgments (why Braveheart is better than The Patriot).

The challenge is immense. So, how do we bridge this gap and achieve AGI? Let’s explore the key steps.

First Key to Creating AGI: Increasing Computational Power – The “But Wait But Why” of Hardware

AGI requires computer hardware with brain-level computational capacity: an AI system as intelligent as the human brain needs comparable raw processing power.

Brain capacity can be measured in calculations per second (cps). Ray Kurzweil estimates the brain’s capacity at around 10^16 cps, or 10 quadrillion cps. He arrived at this by extrapolating from estimates of individual brain structures to the whole brain.

The world’s fastest supercomputer, China’s Tianhe-2, already surpasses this, reaching 34 quadrillion cps. However, Tianhe-2 is massive, occupying 720 square meters, consuming 24 megawatts of power (compared to the brain’s 20 watts), and costing $390 million. It’s not practical for widespread use.

Kurzweil suggests tracking cps per $1,000 as a metric. Human-level AGI becomes feasible when $1,000 can buy 10 quadrillion cps.

Moore’s Law, the historically reliable doubling of computing power roughly every two years, indicates exponential hardware advancement. Relating this to Kurzweil’s metric, we currently achieve about 10 trillion cps per $1,000, aligning with predicted exponential growth:

Graph depicting the exponential growth of computing power over time, measured in calculations per second per $1000. It illustrates the rapid increase in affordable computing capabilities, approaching human brain-level processing power.

For $1,000, computers now exceed mouse brain capacity and are about one-thousandth of human capacity. While seemingly small, consider that in 1985, we were at one-trillionth human capacity, one-billionth in 1995, and one-millionth in 2005. Reaching one-thousandth in 2015 puts us on track for affordable, brain-level hardware by 2025.
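The extrapolation in the paragraph above can be reproduced in a few lines. The data points are the fractions quoted in the text; the assumption that the latest per-decade growth factor simply continues is the sketch’s own, and the variable names are illustrative.

```python
import math

# Fractions of human-brain capacity (taken as 10^16 cps) that $1,000 buys,
# as quoted in the text.
fraction_by_year = {1985: 1e-12, 1995: 1e-9, 2005: 1e-6, 2015: 1e-3}

years = sorted(fraction_by_year)
# Growth factor per decade between consecutive data points.
factors = [
    fraction_by_year[b] / fraction_by_year[a] for a, b in zip(years, years[1:])
]
decade_factor = factors[-1]

# Assumption: the most recent decade's growth factor continues unchanged.
projected_2025 = fraction_by_year[2015] * decade_factor

# Doubling time implied by that per-decade growth factor.
implied_doubling_years = 10 / math.log2(decade_factor)

print(f"per-decade factors: {[round(f) for f in factors]}")
print(f"projected 2025 fraction of brain capacity: {projected_2025:.2f}")
print(f"implied doubling time: {implied_doubling_years:.2f} years")
```

Each decade in the quoted data brings roughly a thousandfold gain, so one more decade at the same pace reaches parity with the brain estimate. That pace implies a doubling time of about one year for cps per $1,000, which is faster than the two-year doubling the text attributes to Moore’s Law; the sketch only restates the quoted data points and does not reconcile the two.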

Thus, the raw computational power for AGI is technically available now, and affordable, widespread AGI hardware is likely within a decade. But computational power alone isn’t intelligence. The next challenge is imbuing this power with human-level intelligence.

Second Key to Creating AGI: Making It Smart – The “But Wait But Why” of Software

This is the more complex challenge. We don’t yet know how to create truly intelligent AI, and developing software that replicates human-level general intelligence remains an unsolved problem. However, various promising strategies are being explored, and the expectation is that one of them will eventually succeed. Here are three prominent approaches:

1) Plagiarize the Brain: The “But Wait But Why” of Reverse Engineering

This approach is akin to copying a successful student’s answers when struggling with a difficult test. We’re trying to replicate the brain’s architecture and functionality, recognizing it as a proven model of intelligence.

Scientists are actively reverse-engineering the brain. Optimistic estimates suggest we could achieve this by 2030. Understanding the brain’s mechanisms could provide blueprints for creating highly efficient and powerful AI. Artificial neural networks are an example of brain-inspired computer architecture. These networks, composed of transistor “neurons,” initially know nothing. They learn through trial and error, adjusting connections based on feedback. For example, in handwriting recognition, initial attempts are random. Correct guesses strengthen the responsible neural pathways; incorrect ones weaken them. Through repeated training, the network develops intelligent pathways, becoming proficient at the task. The brain learns similarly, albeit more sophisticatedly. Brain research continues to reveal innovative ways to leverage neural circuitry for AI.
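The feedback loop described above can be sketched with a single artificial “neuron” (a perceptron). This is a deliberately tiny stand-in: it learns the logical AND of two inputs rather than handwriting, and the task, learning rate, and epoch count are illustrative assumptions.

```python
# A single artificial "neuron" (a perceptron) learning the logical AND of two
# inputs. The task, learning rate, and epoch count are illustrative choices.
TRAINING_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs plus the bias is positive."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0

def train(epochs=20, learning_rate=1.0):
    weights, bias = [0.0, 0.0], 0.0  # the network starts out knowing nothing
    for _ in range(epochs):
        for inputs, target in TRAINING_DATA:
            error = target - predict(weights, bias, inputs)  # feedback signal
            # A correct guess (error == 0) leaves the connections alone;
            # a wrong guess nudges each active connection toward the answer.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

weights, bias = train()
for inputs, target in TRAINING_DATA:
    print(inputs, "->", predict(weights, bias, inputs), "(expected", target, ")")
```

Real networks stack many such units and use more refined update rules, but the principle is the same one the paragraph describes: connections change only in response to feedback about wrong guesses.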

A more ambitious approach is “whole brain emulation.” This involves slicing a brain into thin layers, scanning each layer, and using software to reconstruct a 3D model. Implementing this model on a powerful computer could potentially create a computer capable of all brain functions. With sufficiently precise emulation, a brain’s personality and memories might be preserved after “uploading.” Imagine emulating Jim’s brain just after his death – the computer could “wake up” as Jim, a fully functional AGI, ready to be potentially upgraded to ASI.

How close are we to whole brain emulation? Recently, we emulated the brain of a 1mm-long roundworm (C. elegans) with 302 neurons. The human brain has roughly 100 billion neurons. While this seems daunting, exponential progress is key. Having emulated a worm brain, an ant brain might follow, then a mouse brain, making human brain emulation increasingly plausible.

2) Mimic Evolution: The “But Wait But Why” of Evolutionary Algorithms

If copying the brain directly is too challenging, we can try to replicate the process that created the brain: evolution. Evolution has already proven that creating brain-level intelligence is possible.

Instead of directly engineering AGI, we can simulate evolution using “genetic algorithms.” This involves repeated cycles of performance and evaluation, mimicking biological natural selection. A population of computer programs would attempt to perform tasks. The most successful programs would be “bred” by merging portions of their code to create new programs, while less successful ones are eliminated. Over numerous iterations, this simulated selection would progressively improve the programs. The challenge lies in creating an automated evaluation and breeding cycle that drives this evolutionary process autonomously.
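The evaluate-and-breed cycle can be sketched on a toy problem. Here the “programs” are just 20-bit strings and “success” is the number of ones they contain; the problem, population size, mutation rate, and function names are all assumptions made for illustration, far removed from evolving real software.

```python
import random

# Toy problem (an assumption for illustration): evolve a 20-bit string toward
# all ones. Fitness is simply the number of ones.
GENOME_LENGTH = 20
POPULATION_SIZE = 30
GENERATIONS = 40
MUTATION_RATE = 0.02

def fitness(genome):
    return sum(genome)

def breed(parent_a, parent_b, rng):
    """Merge portions of two parents, then apply rare random mutations."""
    cut = rng.randrange(1, GENOME_LENGTH)
    child = parent_a[:cut] + parent_b[cut:]
    return [bit ^ 1 if rng.random() < MUTATION_RATE else bit for bit in child]

def evolve(seed=0):
    rng = random.Random(seed)
    population = [
        [rng.randint(0, 1) for _ in range(GENOME_LENGTH)]
        for _ in range(POPULATION_SIZE)
    ]
    best_per_generation = [max(map(fitness, population))]
    for _ in range(GENERATIONS):
        # Evaluate: keep the fitter half, eliminate the rest.
        population.sort(key=fitness, reverse=True)
        survivors = population[: POPULATION_SIZE // 2]
        # Breed: refill the population with children of random survivor pairs.
        children = [
            breed(rng.choice(survivors), rng.choice(survivors), rng)
            for _ in range(POPULATION_SIZE - len(survivors))
        ]
        population = survivors + children
        best_per_generation.append(max(map(fitness, population)))
    return best_per_generation

history = evolve()
print("best fitness, first generation:", history[0])
print("best fitness, last generation: ", history[-1])
```

Because the survivors carry over unchanged, the best score never gets worse from one generation to the next. The hard part the text points to, an automatic evaluation that measures something as open-ended as intelligence, is exactly what this toy fitness function sidesteps.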

The downside is evolution’s timescale – billions of years. We aim for decades. However, we have advantages over natural evolution. Evolution is undirected and random, producing many unhelpful mutations. We can guide the process, focusing on beneficial changes. Evolution doesn’t specifically target intelligence; environments might even select against it. We can directly target intelligence as the goal. Evolution also innovates across many domains (energy production, etc.) to support intelligence. We can focus solely on intelligence, leveraging existing technologies like electricity. While evolution is slow, our directed, accelerated approach might be significantly faster.

3) Self-Improving AI: The “But Wait But Why” of Recursive Improvement

This is a more radical, and potentially most promising, approach. Instead of directly programming AGI or mimicking evolution, we can create AI systems designed to improve themselves.

The idea is to build AI whose primary skills are researching AI and modifying its own code. This AI would not just learn but also enhance its own architecture. We would essentially teach computers to be computer scientists, enabling them to bootstrap their own development. Their primary task would be to make themselves smarter. We’ll delve deeper into this concept later.

The Imminent Arrival of AGI: The “But Wait But Why” of Rapid Progress

Rapid hardware advancements and innovative software experiments are converging, suggesting AGI might emerge sooner and more suddenly than anticipated. Two key factors contribute to this potential for rapid, unexpected progress:

- Exponential Acceleration: Exponential growth is powerful. Seemingly slow progress can rapidly accelerate. This GIF illustrates this concept effectively:

Animated GIF demonstrating exponential growth. A small square doubles in size repeatedly, quickly overtaking the entire grid, visually representing how exponential growth can lead to rapid and dramatic changes.

- Software Epiphanies: Software progress can be punctuated by sudden breakthroughs. Like the shift from a geocentric to a heliocentric universe view, a single insight can dramatically accelerate progress. In self-improving AI, a minor system tweak could lead to a massive leap in effectiveness, propelling it toward human-level intelligence much faster than expected.

The Road From AGI to ASI: The “But Wait But Why” of Superintelligence

Imagine we achieve AGI – computers with human-level general intelligence. Will we enter an era of peaceful coexistence between humans and machines? Probably not.

AGI, even at human-level intelligence, would possess significant advantages over humans:

Hardware Advantages:

- Speed: Brain neurons fire at a maximum of about 200 Hz. Today’s microprocessors (and future AGI-era processors) operate at GHz speeds; a 2 GHz chip cycles 10 million times faster. The brain’s internal signals travel at about 120 m/s, while computers can communicate optically at the speed of light, roughly 2.5 million times faster.

- Scale and Memory: Brain size is limited by skull dimensions and communication delays. Computers can scale physically, allowing for vastly greater hardware, RAM, and long-term storage with superior capacity and precision compared to human memory.

- Reliability and Durability: Computer transistors are more accurate and less prone to degradation than biological neurons. They can also be repaired or replaced. Human brains fatigue; computers can operate continuously at peak performance, 24/7.

Software Advantages:

- Editability and Upgradability: Unlike the relatively fixed human brain, computer software is easily updated, modified, and improved. Upgrades can target areas where human brains are weak. The human brain is superb at vision but comparatively poor at complex engineering. Computers could match human vision and become equally optimized in engineering and other domains.

- Collective Intelligence: Humanity excels at collective intelligence through language, communities, writing, printing, and now the internet. Computers will be far superior at this. A global network of AI could instantly synchronize learning across all nodes. They could also act as a unified entity, without human-like disagreements or self-interest.

AI, particularly self-improving AI, wouldn’t view human-level intelligence as a final goal. It’s just a milestone from our perspective. AGI wouldn’t “stop” at our level. Given its inherent advantages, it would quickly surpass human intelligence.

This transition might be profoundly surprising. We perceive animal intelligence as vastly lower than ours, and the range of human intelligence, from the least to the most brilliant, as significant:

Diagram illustrating the perceived spectrum of intelligence, showing a wide gap between animal and human intelligence, and a significant range within human intelligence itself.

As AI intelligence grows, we might initially see it as merely “smarter than animals.” When it reaches the level of “village idiot” human intelligence (Nick Bostrom’s term), we might think, “Cute, like a dumb human!” However, in the grand spectrum of intelligence, the entire human range, from “village idiot” to Einstein, is incredibly narrow. Just after reaching “village idiot” level AGI, it could rapidly become smarter than Einstein, catching us completely off guard:

Diagram correcting the perception of the intelligence spectrum. It shows that the entire range of human intelligence occupies a tiny band compared to the potential spectrum, and especially in contrast to the potential intelligence of ASI.

And then what? What comes after surpassing Einstein?

An Intelligence Explosion: The “But Wait But Why” of Unprecedented Change

This is where the topic stops feeling normal. The following concepts are based on real science and forecasts from leading thinkers and scientists.

As mentioned, most AGI development models rely on self-improvement. Even AGI systems developed without self-improvement capabilities would be intelligent enough to develop them if desired.

This leads to recursive self-improvement. An AI at a certain intelligence level (say, “village idiot”) is programmed to improve its own intelligence. Once it does, it becomes smarter – perhaps at Einstein’s level. With Einstein-level intellect, improving itself becomes easier, leading to even greater leaps. These leaps accelerate, rapidly pushing the AGI to superintelligence – ASI. This is the Intelligence Explosion, the ultimate manifestation of the Law of Accelerating Returns.

The timeline for reaching AGI is debated. A survey of hundreds of scientists suggested a median estimate of 2040 for a greater than 50% chance of AGI – just 25 years away. Many experts believe the transition from AGI to ASI could be rapid. Imagine:

After decades, AI finally reaches low-level AGI, understanding the world like a four-year-old. Within an hour, it produces a grand unified theory of physics, something humans haven’t achieved. Ninety minutes later, it becomes ASI, 170,000 times more intelligent than a human.

Superintelligence of this magnitude is incomprehensible to us, just as Keynesian economics is to a bumblebee. Our intelligence spectrum is narrow; we lack words for IQs of 12,952.

Human dominance on Earth demonstrates a clear principle: intelligence equates to power. ASI, once created, will be the most powerful entity on Earth. All life, including humanity, will be at its mercy. And this could happen within decades.

If our limited brains invented wifi, an entity 100, 1,000, or a billion times smarter should effortlessly control matter and energy, achieving anything we consider magical or godlike. Reversing aging, curing diseases, eliminating hunger, achieving immortality, controlling weather – all become potentially mundane tasks for ASI. But so does the potential for immediate global destruction. With ASI, an omnipotent force arrives on Earth. The crucial question becomes:

Will it be a nice God?

This is the central question explored in Part 2 of this post.

(Sources are listed at the bottom of Part 2.)

